AIBOX OS comes with a built-in AI object detection program configured to detect people.

This page explains this program and provides instructions on how to modify it to detect other objects such as animals or vehicles.

Please make sure you are logged into AIBOX OS and have the ability to view and edit files.

The detection program is placed in the following directory on the AIBOX:

/home/cap/aicap/extmod

The location under /home is not configured as a RAM disk, so there is no need to disable the RAM disk before making changes.

An object detection program using YOLO11 is installed here by default.

For more information about YOLO11, see the Ultralytics YOLO11 documentation.

The files provided are as follows:

| File Name | Description |
|---|---|
| start.sh | Shell script called when the main AIBOX program starts |
| stop.sh | Shell script called when the main AIBOX program terminates |
| extmod.py | Python program that performs object detection |
| aicap.py | Defines functions for executing the aicap command |
| yolo11m_ncnn_model | YOLO11 model (yolo11m.pt) exported in NCNN format. For more information about NCNN export in YOLO11, see the Ultralytics NCNN export documentation. |
| docker-compose.yml | Docker container configuration and settings |

start.sh and stop.sh handle the automatic startup and shutdown of the detection program. In this program, they start and stop the container with docker compose.
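The actual contents of start.sh and stop.sh are not shown on this page. The following hypothetical Python sketch illustrates the kind of docker compose calls they are described as making. It assumes that docker-compose.yml lives in /home/cap/aicap/extmod and that the standard `docker compose up -d` and `docker compose down` commands are used. The function names are made up for illustration.

```python
import subprocess
from pathlib import Path
from typing import List

# Directory that holds docker-compose.yml (assumed to be the extmod directory).
EXTMOD_DIR = Path("/home/cap/aicap/extmod")


def compose_command(action: str, compose_dir: Path = EXTMOD_DIR) -> List[str]:
    """Build the docker compose command line for 'start' or 'stop' (hypothetical helper)."""
    compose_file = str(compose_dir / "docker-compose.yml")
    if action == "start":
        # -d starts the container in the background so the caller is not blocked.
        return ["docker", "compose", "-f", compose_file, "up", "-d"]
    if action == "stop":
        # down stops and removes the container defined in the compose file.
        return ["docker", "compose", "-f", compose_file, "down"]
    raise ValueError(f"unknown action: {action!r}")


def run_compose(action: str) -> None:
    """Run the command; raises subprocess.CalledProcessError if docker compose fails."""
    subprocess.run(compose_command(action), check=True)
```

Splitting command construction from execution lets you inspect the exact command line before anything is run on the AIBOX.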

Object detection is performed in Python with YOLO11. However, the program does not use the standard PyTorch model. It uses a copy converted to the NCNN format, which is lighter and faster.
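The bundled yolo11m_ncnn_model is already converted, so you only need to export a model yourself if you want to switch to a different YOLO11 model, such as a smaller variant. Below is a minimal sketch using the Ultralytics Python API. It assumes the ultralytics package is installed, as it is in the Docker image described below. The helper ncnn_model_dir is hypothetical and reproduces the `<name>_ncnn_model` directory naming that Ultralytics uses for NCNN exports.

```python
from pathlib import Path


def ncnn_model_dir(weights: str) -> str:
    """Directory name Ultralytics gives an NCNN export, e.g. 'yolo11m.pt' -> 'yolo11m_ncnn_model'."""
    return f"{Path(weights).stem}_ncnn_model"


def export_to_ncnn(weights: str = "yolo11m.pt") -> str:
    """Export a YOLO11 PyTorch model to NCNN format and return the exported path."""
    # Imported inside the function so the helper above works without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    # export() writes the NCNN files next to the weights and returns the output path.
    return model.export(format="ncnn")
```

You could then point MODEL_FILE_NAME at the new directory, for example `ncnn_model_dir("yolo11n.pt")`, and load it with `YOLO("yolo11n_ncnn_model")`.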

AIBOX OS comes with a pre-registered Docker image that can run YOLO11 with NCNN:

| REPOSITORY | TAG | SIZE |
|---|---|---|
| aicap/arm64/ultralytics | 1.0.250923 | 3.43GB |

This image is based on the YOLO11 image published by Ultralytics. It adds the modules required for NCNN conversion and execution, plus the environment configuration needed to run the aicap command. As a result, basic YOLO11 detection programs for AIBOX OS can run in containers launched from this image.

The built-in AI detection program starts its container with docker compose, so a docker-compose.yml file is placed in the same directory. The volumes, environment, and network_mode settings in this file are explained below.

```yaml
volumes:
  - /usr/local/aicap:/usr/local/aicap
  - /home/cap/aicap:/home/cap/aicap
  - /var/www:/var/www
environment:
  MODEL_FILE_NAME: yolo11m_ncnn_model
  PREVIEW_IMAGE_PATH: /var/www/html/result.jpg
network_mode: host
```

The volumes parameter maps directories between the host and container. Here we specify three mappings.

The first two are necessary for running the aicap command and Python programs, so please configure them the same way when creating your own detection program.

The third mapping for the Nginx HTTP public directory is necessary when you want to view detection result images in a browser.

| Mapping Source & Destination | Description |
|---|---|
| /usr/local/aicap | Execution directory for the aicap command |
| /home/cap/aicap | Location for detection program files and various configuration files |
| /var/www | Nginx HTTP public directory. Detection result images are saved here so that they can be viewed in a browser. |

By default, public access to the Nginx HTTP public directory is turned OFF.

The environment variables pass the following two values required by the Python program (extmod.py):

| Environment Variable Name | Description |
|---|---|
| MODEL_FILE_NAME | Name of the YOLO model to use (yolo11m_ncnn_model) |
| PREVIEW_IMAGE_PATH | Save location for detection result images (/var/www/html/result.jpg) |
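This page does not show how extmod.py reads these variables. The hypothetical sketch below shows one common way to do it, with os.environ and defaults matching the values in docker-compose.yml. The Settings and load_settings names are invented, and the fallback defaults are an assumption. They are not necessarily the real program's behavior.

```python
import os
from typing import Mapping, NamedTuple


class Settings(NamedTuple):
    model_file_name: str
    preview_image_path: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read the values passed in through the environment section of docker-compose.yml."""
    return Settings(
        # Fallbacks mirror docker-compose.yml; they only apply if a variable is missing.
        model_file_name=env.get("MODEL_FILE_NAME", "yolo11m_ncnn_model"),
        preview_image_path=env.get("PREVIEW_IMAGE_PATH", "/var/www/html/result.jpg"),
    )
```

Passing the environment as a parameter makes it easy to try other values without editing docker-compose.yml, for example `load_settings({"MODEL_FILE_NAME": "yolo11n_ncnn_model"})`.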

The aicap command accesses the capture program (cvc) to obtain camera frame images, but cvc is configured to only accept requests from localhost. Therefore, it is necessary to set network_mode to host to share the network between the host and container.

The object detection program (extmod.py) consists of the following two functions and a main function that calls them.

| Function Name | Description |
|---|---|
| create_result_jpeg | Draws red bounding boxes around detected objects on the camera frame image obtained with get_frame. |
| parse_results | Converts the results of YOLO's predict method into an easy-to-use format. |
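The real parse_results is not reproduced on this page. Below is a hypothetical sketch of what "an easy-to-use format" could look like. It takes the parallel arrays from an Ultralytics result (class IDs, confidences, and xyxy boxes) together with the class-name dictionary, and returns simple records. The Detection dataclass and the exact output shape are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Detection:
    class_id: int
    label: str
    confidence: float
    box: List[float]  # [x1, y1, x2, y2] in pixels


def _as_list(values) -> list:
    # torch tensors and numpy arrays provide tolist(); plain sequences are copied with list().
    return values.tolist() if hasattr(values, "tolist") else list(values)


def parse_results(class_ids, confidences, boxes, names: Dict[int, str]) -> List[Detection]:
    """Turn the parallel arrays of one YOLO result into a list of Detection records."""
    detections: List[Detection] = []
    for cls, conf, xyxy in zip(_as_list(class_ids), _as_list(confidences), _as_list(boxes)):
        class_id = int(cls)  # class IDs arrive as floats
        detections.append(
            Detection(
                class_id=class_id,
                label=names.get(class_id, str(class_id)),
                confidence=float(conf),
                box=[float(v) for v in xyxy],
            )
        )
    return detections
```

With Ultralytics, this would be called as `r = model.predict(frame)[0]` followed by `parse_results(r.boxes.cls, r.boxes.conf, r.boxes.xyxy, r.names)`.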

Looking at the main function, you will see it is a very simple program.

The processing flow is as follows, which repeats continuously:

[Get camera frame image] → [Run object detection with YOLO] → [Format results] → [Draw detection frame if detected] → [Send push notification] → [Save preview image]
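The cycle above can be sketched as a loop over injected steps. All function names here are hypothetical stand-ins for the real get_frame, predict/parse_results, create_result_jpeg, and aicap push calls. The sketch also makes two assumptions: a push notification is sent only when something is detected, and the preview image is saved on every cycle.

```python
from typing import Any, Callable, List, Optional


def run_detection_loop(
    get_frame: Callable[[], Any],
    detect: Callable[[Any], List[Any]],
    draw: Callable[[Any, List[Any]], Any],
    push: Callable[[Any], None],
    save_preview: Callable[[Any], None],
    max_iterations: Optional[int] = None,
) -> int:
    """Run the get-frame -> detect -> draw -> push -> preview cycle.

    max_iterations=None repeats forever, like the built-in program.
    Returns how many push notifications were sent.
    """
    pushed = 0
    count = 0
    while max_iterations is None or count < max_iterations:
        count += 1
        frame = get_frame()
        detections = detect(frame)  # predict + format results
        if detections:
            frame = draw(frame, detections)  # draw red boxes on the frame
            push(frame)  # send the annotated frame as the notification image
            pushed += 1
        save_preview(frame)  # overwrite the preview image every cycle
    return pushed
```

Injecting the steps as functions keeps the loop itself trivial to follow and lets each step be swapped or tested on its own.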

The aicap push command accepts the image to send as an argument.

In this program, the camera frame image with the red detection boxes drawn on it is passed as that argument.

To change the objects to detect from people to other objects, or to adjust detection accuracy, modify the values of global variables in the program.

To change the objects to detect, modify the value of the CLASSES variable defined on line 51.

```python
#
# Label IDs of objects to detect
# Multiple selections possible (specify as a list)
# When using models provided by YOLO,
# specify the index of COCO (Common Objects in Context)
# (excerpt)
# ID  Class Name
# 0   person
# 1   bicycle
# 2   car
# 3   motorcycle
# 5   bus
# 16  dog
# 17  horse
# 18  sheep
# 19  cow
# 21  bear
# For example, to detect cars, buses, and motorcycles: CLASSES = [2, 3, 5]
#
CLASSES = [0]
```

As noted in the comments, you can change the objects to detect by modifying this value.

For example, if you want to detect bears, specify 21.

```python
# 21 bear
CLASSES = [21]
```

You can also specify multiple objects. For example, to detect both cars and people together, write [0, 2].

```python
# 0 person
# 2 car
CLASSES = [0, 2]
```
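Looking up IDs by hand is error-prone: bus is 5, not 4. The hypothetical helper below turns class names into IDs using only the COCO excerpt from the comment above. For the full list, an Ultralytics model exposes its ID-to-name mapping as `model.names`.

```python
from typing import Iterable, List

# COCO class IDs, copied from the excerpt in the extmod.py comment.
COCO_EXCERPT = {
    "person": 0, "bicycle": 1, "car": 2, "motorcycle": 3, "bus": 5,
    "dog": 16, "horse": 17, "sheep": 18, "cow": 19, "bear": 21,
}


def class_ids(names: Iterable[str]) -> List[int]:
    """Translate class names into the sorted list of integer IDs expected by CLASSES."""
    names = list(names)
    unknown = [n for n in names if n not in COCO_EXCERPT]
    if unknown:
        raise ValueError(f"not in the COCO excerpt: {unknown}")
    return sorted(COCO_EXCERPT[n] for n in names)
```

For example, `CLASSES = class_ids(["car", "bus", "motorcycle"])` gives `[2, 3, 5]`.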

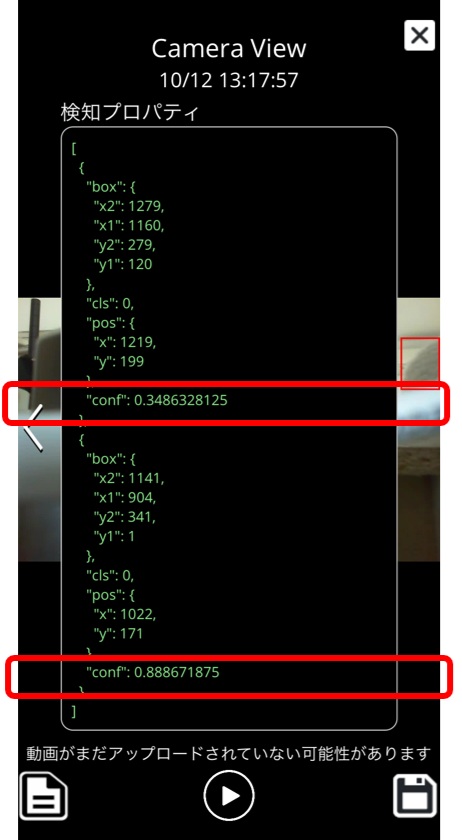

In practice, you may encounter false detections or missed detections.

In such cases, adjust the detection parameters.

The parameters to adjust are the CONF variable defined on line 24 and the IOU variable defined on line 30.

```python
# confidence threshold
# Detection confidence threshold (0.0 ~ 1.0)
# Detections with a confidence score below this threshold
# are not included in the results
CONF = 0.3
# Intersection over Union
# Overlap of detection boxes (0.0 ~ 1.0)
# YOLO may detect the same object with multiple candidate boxes in an image.
# This is the threshold to determine which boxes to retain as results
# = threshold to avoid duplicate detection
IOU = 0.5
```
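Ultralytics applies both thresholds internally. The standalone illustration below is a simplified, class-agnostic greedy non-maximum suppression, not the library's implementation. It shows how CONF removes low-score candidates and how IOU decides whether an overlapping box counts as a duplicate.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes: Sequence[Box], scores: Sequence[float],
                      conf: float = 0.3, iou_thr: float = 0.5) -> List[int]:
    """Return the indices of boxes kept after the CONF filter and greedy NMS."""
    # 1) Drop candidates whose score is below the confidence threshold (CONF).
    candidates = [i for i, s in enumerate(scores) if s >= conf]
    # 2) Visit the remaining candidates from highest to lowest score.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in candidates:
        # Keep a box only if it overlaps every already-kept box by at most iou_thr.
        if all(iou(boxes[i], boxes[k]) <= iou_thr for k in kept):
            kept.append(i)
    return kept
```

Two nearly identical boxes around one person (IoU 0.81) collapse into one result at IOU = 0.5. Raising IOU above 0.81 would keep both as duplicates.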

You will mainly adjust the CONF parameter. Simply put, it is the detection threshold. Lowering it detects more objects but also increases false detections. Raising it reduces false detections but increases missed detections.
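The page does not show the predict call in extmod.py. With the Ultralytics API, however, these globals would typically be passed as the `classes`, `conf`, and `iou` arguments of `model.predict`. Below is a hypothetical helper that checks the values against the documented ranges before bundling them. The function name and the validation rules are assumptions.

```python
from typing import Dict, List, Sequence

# Defaults shown on this page for the globals in extmod.py.
CLASSES: List[int] = [0]
CONF: float = 0.3
IOU: float = 0.5


def predict_kwargs(classes: Sequence[int] = CLASSES, conf: float = CONF,
                   iou: float = IOU) -> Dict[str, object]:
    """Validate the tuning values and return them as keyword arguments for predict()."""
    for name, value in (("CONF", conf), ("IOU", iou)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0.0 and 1.0, got {value}")
    if not classes or any(not isinstance(c, int) or c < 0 for c in classes):
        raise ValueError("CLASSES must be a non-empty list of non-negative integers")
    # Copy the list so later edits to CLASSES cannot change an already-built dict.
    return {"classes": list(classes), "conf": conf, "iou": iou}
```

Usage would look like `results = YOLO("yolo11m_ncnn_model").predict(frame, **predict_kwargs())`. A typo such as `CONF = 3` then fails immediately instead of silently producing no detections.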

The optimal values vary depending on the clarity of the camera image and its installation location.

While monitoring the detection result information sent with push notifications, adjust the values so that unnecessary detections are eliminated.
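If you have recorded the confidence scores of detections you judged correct and of ones you judged false, a quick tally shows the trade-off described above. The helper below is hypothetical, and the scores are your own observations. For each candidate CONF value, it counts how many correct and how many false detections would still be reported.

```python
from typing import Dict, Iterable, Sequence, Tuple


def sweep_conf(true_scores: Sequence[float], false_scores: Sequence[float],
               thresholds: Iterable[float] = (0.2, 0.3, 0.4, 0.5, 0.6)) -> Dict[float, Tuple[int, int]]:
    """Map each candidate CONF value to (correct detections kept, false detections kept)."""
    result: Dict[float, Tuple[int, int]] = {}
    for t in thresholds:
        kept_true = sum(1 for s in true_scores if s >= t)    # real objects still reported
        kept_false = sum(1 for s in false_scores if s >= t)  # false detections still reported
        result[t] = (kept_true, kept_false)
    return result
```

Pick the lowest threshold at which the false-detection count becomes acceptable, so that as few real objects as possible are missed.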

To view detection results in real time, please enable the detection result preview feature.

You will then be able to check the detection results in real time via a web browser.

If you want to switch back to this built-in AI detection program after updating to another detection program, run the update script in the same way as with other programs.

Log in to the AIBOX and run the following command to download update.zip, which is published on GitHub.

```shell
$ wget https://github.com/daddyYukio/AICAPTURE/raw/refs/heads/main/programs/built-in-object-detection/update.zip
```

After extracting it, run update.sh to update all required files at once.

Once the update is complete, please reboot the AIBOX.